Proxmox VE and PBS on a Hetzner dedicated server

Circumstances forced me to move all my self-hosted services to a location that does not depend on where I live. Someday, I hope to host it all at home again. For now, I want to describe how I set up Proxmox VE on a Hetzner dedicated server, together with Proxmox Backup Server, Tailscale, and backups to Backblaze. This might look like a trivial task at first, but I ran into some issues and obstacles. I want to document them here, both as a reminder for myself and as a heads-up for others.

A warning: this is not a full step-by-step guide. It is rather a set of notes on the process and the steps I followed.

Installation

Hetzner documents several installation options, and I chose the first one: installing Proxmox VE on top of Debian. Following the docs, I booted the Rescue System and installed Debian with installimage. Then I followed the Proxmox Wiki guide to install Proxmox VE. One step has you install a new kernel and reboot to activate it. I had to reboot the server twice before it came back online, and I have no idea why.

Network

Now to the hard part. The initial plan was to put all LXCs on a single local network so they could communicate internally. The host and some containers would also get public IPs for external access.

I reviewed many network configuration examples around the internet and chose a bridged setup with masquerading (NAT). Public IPs live on the vmbr0 bridge, and the local network on vmbr1 reaches the internet through NAT.

For the network, I edited /etc/network/interfaces like this:

```
auto lo
iface lo inet loopback
iface lo inet6 loopback

iface enp5s0 inet manual
iface enp5s0 inet6 manual

auto vmbr0
iface vmbr0 inet static
    address <Public IPv4>/26
    gateway <Public IPv4 gateway>
    pointopoint <Public IPv4 gateway>
    bridge-ports enp5s0
    bridge-stp off
    bridge-fd 0
    up route add -net <Public IPv4 gateway - 1> netmask 255.255.255.192 gw <Public IPv4 gateway> dev vmbr0
    post-up echo 1 > /proc/sys/net/ipv4/ip_forward
    post-up echo 1 > /proc/sys/net/ipv6/conf/all/forwarding
    post-up iptables -t nat -A PREROUTING -i vmbr0 -p tcp -d <Public IPv4> --dport 21074 -j DNAT --to 192.168.50.3
    post-down iptables -t nat -D PREROUTING -i vmbr0 -p tcp -d <Public IPv4> --dport 21074 -j DNAT --to 192.168.50.3

iface vmbr0 inet6 static
    address <Public IPv6>/64
    gateway <Public IPv6 gateway>

auto vmbr1
iface vmbr1 inet static
    address 192.168.50.1/24
    netmask 255.255.255.0
    bridge-ports none
    bridge-stp off
    bridge-fd 0
    post-up iptables -t raw -I PREROUTING -i fwbr+ -j CT --zone 1
    post-down iptables -t raw -D PREROUTING -i fwbr+ -j CT --zone 1
    post-up iptables -t nat -A POSTROUTING -s '192.168.50.0/24' -o vmbr0 -j MASQUERADE
    post-down iptables -t nat -D POSTROUTING -s '192.168.50.0/24' -o vmbr0 -j MASQUERADE
```

Let's see what's going on here.

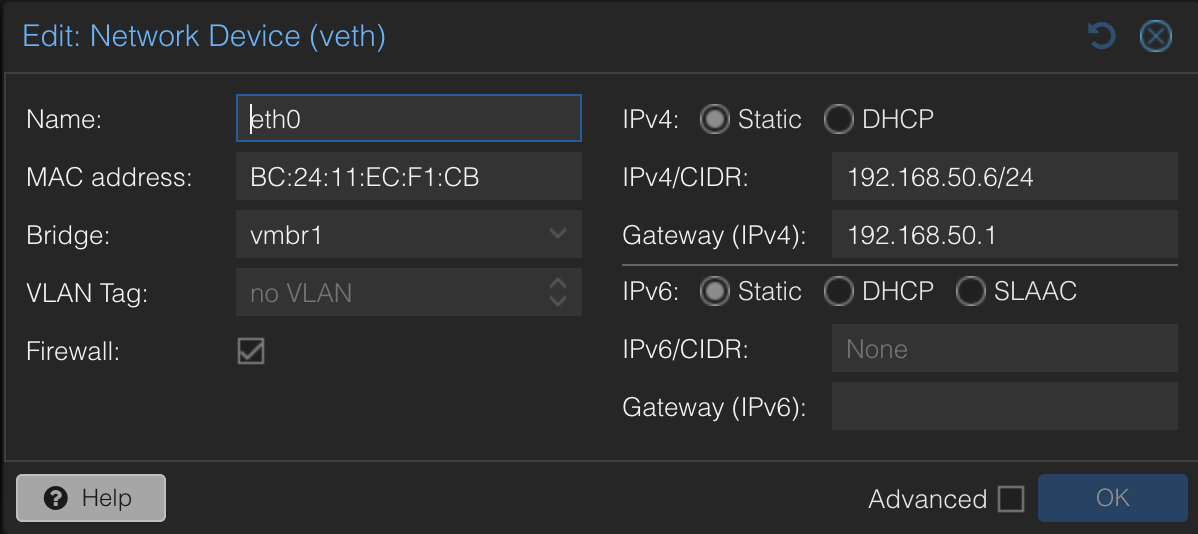

For containers that don't require a public IP, I choose the vmbr1 bridge and set an IP from the local range (192.168.50.x). I use the host's local IP (192.168.50.1) as the gateway:

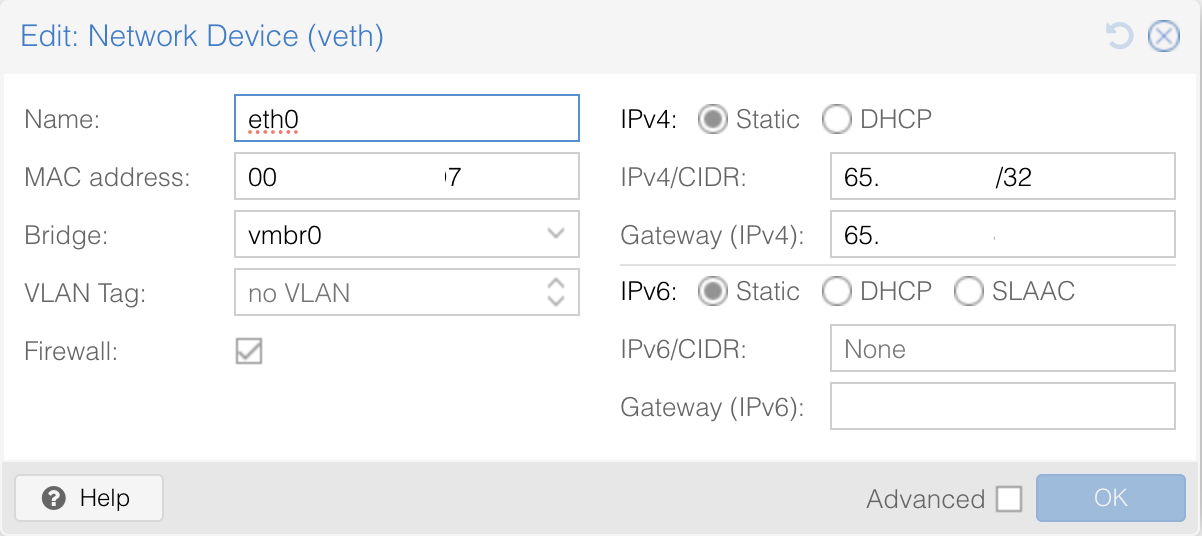

For LXCs that require their own public IP, I choose the vmbr0 bridge and set the IP, gateway, and MAC address provided by Hetzner.
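Keeping track of which 192.168.50.x addresses are already taken gets tedious as containers multiply. Below is a minimal sketch using Python's standard ipaddress module. It returns the next free address on the vmbr1 network and skips the host's own 192.168.50.1. The list of used addresses is hypothetical; it would come from your own notes or container configs.

```python
import ipaddress

# Local network behind vmbr1, as configured in /etc/network/interfaces.
SUBNET = ipaddress.ip_network("192.168.50.0/24")
# The host's own address on vmbr1, used as the containers' gateway.
GATEWAY = ipaddress.ip_address("192.168.50.1")


def next_free_ip(used):
    """Return the lowest usable address in SUBNET that is neither the
    gateway nor in `used` (an iterable of address strings)."""
    taken = {ipaddress.ip_address(a) for a in used} | {GATEWAY}
    # hosts() already excludes the network (.0) and broadcast (.255) addresses.
    for addr in SUBNET.hosts():
        if addr not in taken:
            return str(addr)
    raise RuntimeError(f"no free addresses left in {SUBNET}")


if __name__ == "__main__":
    # Hypothetical addresses already assigned to containers.
    print(next_free_ip(["192.168.50.3", "192.168.50.4", "192.168.50.8"]))
```

The container then gets that address with the /24 prefix and 192.168.50.1 as its gateway.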

The route with <Public IPv4 gateway - 1> comes from the official Hetzner docs. That page was updated while I was writing this post, and the line was removed from its example configuration. Some guides around the internet still include it, so I'm leaving it as is for now. The -net value must be the network address of the /26 subnet. "Gateway - 1" gives exactly that, as long as the gateway is the first usable address of the subnet. For example, if your public IP gateway is 67.11.34.65, the route will be:

```
up route add -net 67.11.34.64 netmask 255.255.255.192 gw 67.11.34.65 dev vmbr0
```
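The "gateway - 1" shortcut only works when the gateway is the first usable address of the /26. A safer way is to mask the gateway with the prefix length. Below is a minimal sketch using Python's ipaddress module that builds the whole route line. The gateway values are only examples, and the vmbr0 and /26 defaults are taken from the config above.

```python
import ipaddress


def route_line(gateway, prefix=26, dev="vmbr0"):
    """Build the 'up route add' line for the subnet that contains `gateway`.

    The -net value must be the subnet's network address: route(8) refuses a
    network address that has host bits set for the given netmask.
    """
    # strict=False accepts an address with host bits set and masks them off.
    net = ipaddress.ip_network(f"{gateway}/{prefix}", strict=False)
    return (f"up route add -net {net.network_address} netmask {net.netmask} "
            f"gw {gateway} dev {dev}")


if __name__ == "__main__":
    # Gateway is the first usable address, so "gateway - 1" agrees (.64).
    print(route_line("67.11.34.65"))
    # Gateway is not the first usable address: "gateway - 1" (.22) would be
    # wrong, and the masked network address is 67.11.34.0.
    print(route_line("67.11.34.23"))
```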

The next lines enable IPv4 and IPv6 forwarding, so the host can route packets between its bridges:

```
post-up echo 1 > /proc/sys/net/ipv4/ip_forward
post-up echo 1 > /proc/sys/net/ipv6/conf/all/forwarding
```
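These values are written only when vmbr0 comes up, so a quick check after a reboot confirms that forwarding is actually on. Below is a minimal sketch that reads the same two /proc files. Treating a missing or unreadable file as "disabled" is my own choice for the sketch.

```python
from pathlib import Path

# Kernel switches set by the post-up lines in /etc/network/interfaces.
FORWARDING_SWITCHES = (
    "/proc/sys/net/ipv4/ip_forward",
    "/proc/sys/net/ipv6/conf/all/forwarding",
)


def forwarding_status(paths=FORWARDING_SWITCHES):
    """Map each sysctl file to True if it currently reads "1"."""
    status = {}
    for p in paths:
        try:
            status[p] = Path(p).read_text().strip() == "1"
        except OSError:
            # File missing (e.g. IPv6 disabled) or not readable.
            status[p] = False
    return status


if __name__ == "__main__":
    for path, enabled in forwarding_status().items():
        print(f"{path}: {'enabled' if enabled else 'DISABLED'}")
```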

Here is an example of forwarding port 21074 on the public IP to the same port on the local IP of a single LXC. The post-down line deletes exactly the rule that post-up added:

```
post-up iptables -t nat -A PREROUTING -i vmbr0 -p tcp -d <Public IPv4> --dport 21074 -j DNAT --to 192.168.50.3
post-down iptables -t nat -D PREROUTING -i vmbr0 -p tcp -d <Public IPv4> --dport 21074 -j DNAT --to 192.168.50.3
```
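Each forwarded port needs a post-up/post-down pair that differs only in -A versus -D. A typo in one of them leaves a stale rule behind. Below is a minimal sketch of a hypothetical helper that renders both lines from a single rule spec, in the same format as above. <Public IPv4> stays a placeholder.

```python
def port_forward_lines(public_ip, port, target, proto="tcp", iface="vmbr0"):
    """Return the post-up/post-down pair for one DNAT port forward.

    Both lines share one rule spec, so the delete always matches the add.
    """
    spec = (f"PREROUTING -i {iface} -p {proto} -d {public_ip} "
            f"--dport {port} -j DNAT --to {target}")
    return [
        f"post-up iptables -t nat -A {spec}",
        f"post-down iptables -t nat -D {spec}",
    ]


# Hypothetical list of forwards: (public port, container IP).
FORWARDS = [(21074, "192.168.50.3")]

if __name__ == "__main__":
    for port, target in FORWARDS:
        for line in port_forward_lines("<Public IPv4>", port, target):
            print(line)
```

To land on a different port inside the container, the target can be written as 192.168.50.3:PORT, which the DNAT target accepts.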

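For the local vmbr1 network, the next lines are needed for the Proxmox firewall to work properly. As the Proxmox wiki explains for masquerading setups, they put traffic coming from the firewall bridges (fwbr+) into a separate conntrack zone. Without them, the firewall may block outgoing connections from the containers:

```
post-up iptables -t raw -I PREROUTING -i fwbr+ -j CT --zone 1
post-down iptables -t raw -D PREROUTING -i fwbr+ -j CT --zone 1
```

The last two lines of the vmbr1 section masquerade traffic from 192.168.50.0/24 as it leaves through vmbr0. This is what gives the local-only LXCs internet access via the host's public IP.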

Network security

Ports 8006 (Proxmox VE web UI), 22 (SSH), and 8007 (PBS web UI) are open in the Proxmox firewall on the host but explicitly closed in the Hetzner firewall. That way, I can reach the Proxmox VE and PBS web UIs only from the Tailscale network. In case of an emergency or misconfiguration, I can still open the critical ports in the Hetzner firewall and get access via the server's public IP.
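To confirm that the Hetzner firewall really blocks the management ports, check them from a machine outside both Hetzner and the tailnet. Below is a minimal sketch using Python's socket module. The public IP is a placeholder from the documentation range, so substitute your server's address. With the setup described above, all three ports should report closed.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, dropped by a firewall (timeout), or unreachable.
        return False


if __name__ == "__main__":
    PUBLIC_IP = "203.0.113.10"  # placeholder: replace with the server's public IPv4
    for port in (22, 8006, 8007):
        state = "OPEN" if port_open(PUBLIC_IP, port) else "closed"
        print(f"{PUBLIC_IP}:{port} {state}")
```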

Public Reverse Proxy

I know it is not best practice to install anything directly on a Proxmox host, but here it looked like the right choice. The host already has a public IP address, and I can't unassign it because that is the only way to access the server in case of failure.

So I installed Caddy directly on the Proxmox host and opened ports 80 and 443 for it. Caddy handles incoming requests and proxies them to the local IPs of the LXCs:

```
git.nicelycomposed.codes {
    reverse_proxy 192.168.50.3:3000
}
```

Tailscale and Internal Reverse Proxy

This is what's usually called a "bastion host": a single entry point to internal resources that you don't want to expose publicly. I have an LXC for this with Tailscale and a second Caddy instance installed. Let's see how it works with an example.

I want to securely connect to the Proxmox VE web UI using a valid SSL certificate at https://pve.int.example.com.

First, to make Tailscale work inside an LXC, the container needs access to the host's TUN device (/dev/net/tun), which requires extra configuration on the Proxmox host. The LXC configuration file is located at /etc/pve/lxc/<CT_ID>.conf. Add these two lines to it:

```
lxc.cgroup2.devices.allow: c 10:200 rwm
lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file
```

Then just restart the LXC.
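When several containers need the TUN device, a small script saves hand-editing. Below is a minimal sketch that assumes it runs as root on the Proxmox host. It inserts the two lines before the first [section] header rather than appending them. Proxmox stores snapshots as [name] sections after the main config, and lines appended after such a header would belong to the snapshot, not to the running container. The container ID 105 is hypothetical.

```python
from pathlib import Path

# Lines that give the container access to the host's /dev/net/tun (char 10:200).
TUN_LINES = [
    "lxc.cgroup2.devices.allow: c 10:200 rwm",
    "lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file",
]


def enable_tun(conf_path):
    """Add TUN_LINES to the main section of an LXC config; return True if changed."""
    path = Path(conf_path)
    lines = path.read_text().splitlines()
    # Snapshot/pending sections start with "[name]"; the main section ends before.
    idx = next((i for i, line in enumerate(lines) if line.startswith("[")), len(lines))
    # Keep the blank separator line(s) between the main section and the snapshots.
    while idx > 0 and not lines[idx - 1].strip():
        idx -= 1
    main = lines[:idx]
    missing = [line for line in TUN_LINES if line not in main]
    if not missing:
        return False
    lines[idx:idx] = missing
    path.write_text("\n".join(lines) + "\n")
    return True


if __name__ == "__main__":
    ct_id = 105  # hypothetical container ID
    changed = enable_tun(f"/etc/pve/lxc/{ct_id}.conf")
    print("updated, restart the container" if changed else "already configured")
```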

Next, I installed Tailscale and Caddy on the bastion host. I then created a DNS record pointing pve.int.example.com to the bastion host's IP address in the Tailscale network:

```
A pve.int.example.com 10.11.12.13
```
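By default, Tailscale assigns IPv4 addresses from the 100.64.0.0/10 range, so the record should resolve to an address in that range. Below is a minimal sketch with only the standard library. It resolves a name and checks whether the answer is a tailnet address. The hostname is the example one from this post, and the IPv4-only check is a simplification.

```python
import ipaddress
import socket

# Tailscale assigns IPv4 addresses from the CGNAT range 100.64.0.0/10.
TAILNET_V4 = ipaddress.ip_network("100.64.0.0/10")


def is_tailnet_ip(addr):
    """True if `addr` is an IPv4 address inside Tailscale's address range."""
    ip = ipaddress.ip_address(addr)
    return ip.version == 4 and ip in TAILNET_V4


def check_record(hostname):
    """Resolve `hostname` and report whether it points into the tailnet."""
    try:
        addr = socket.gethostbyname(hostname)
    except socket.gaierror:
        # Name does not resolve (yet).
        return None, False
    return addr, is_tailnet_ip(addr)


if __name__ == "__main__":
    print(check_record("pve.int.example.com"))
```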

Then, in the Caddy config, I proxied pve.int.example.com to the local IP of my Proxmox host on port 8006 over HTTPS. I skip TLS verification because Proxmox uses a self-signed certificate by default:

```
pve.int.example.com {
    reverse_proxy 192.168.50.1:8006 {
        transport http {
            tls
            tls_insecure_skip_verify
        }
    }
}
```

Now I'm able to point my browser to https://pve.int.example.com while connected to my Tailscale network.

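The bastion host has all ports closed and is not exposed publicly. Because of that, Caddy's default ACME challenges (HTTP-01 and TLS-ALPN-01) cannot succeed when issuing certificates for the internal domains. The DNS challenge has to be used instead, which requires a Caddy build that includes the DNS provider module for your DNS host.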
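The DNS challenge works here because the CA never connects to the server; it only looks up a TXT record. Per RFC 8555 (section 8.4), the record is named _acme-challenge.<domain>. Its value is the unpadded base64url SHA-256 digest of the key authorization (token.thumbprint). Caddy's DNS provider module creates this record through your DNS host's API. The sketch below only computes the record, using made-up token and thumbprint values.

```python
import base64
import hashlib


def dns01_record(domain, key_authorization):
    """Return (name, value) of the ACME DNS-01 TXT record for `domain`."""
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    # base64url without "=" padding, as RFC 8555 requires.
    value = base64.urlsafe_b64encode(digest).decode("ascii").rstrip("=")
    return f"_acme-challenge.{domain}", value


if __name__ == "__main__":
    # Hypothetical values: the real token comes from the CA and the
    # thumbprint from the ACME account key.
    name, value = dns01_record("pve.int.example.com", "example-token.example-thumbprint")
    print(name, "TXT", value)
```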

I can add other internal resources hosted on other LXCs in the same way:

```
dockge.int.example.com {
    reverse_proxy 192.168.50.4:5000
}

element-admin.int.example.com {
    reverse_proxy 192.168.50.8:8080
}
```
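Every internal service follows the same pattern, so the site blocks can be generated from a single hostname-to-backend table. Below is a minimal sketch of a hypothetical generator. The table repeats the examples above, and a backend flagged as self-signed gets the same transport block as the Proxmox entry.

```python
# Hypothetical table: hostname -> (backend address, backend uses a self-signed cert)
SERVICES = {
    "pve.int.example.com": ("192.168.50.1:8006", True),
    "dockge.int.example.com": ("192.168.50.4:5000", False),
    "element-admin.int.example.com": ("192.168.50.8:8080", False),
}


def site_block(host, backend, self_signed=False):
    """Render one Caddyfile site block that reverse-proxies host to backend."""
    if not self_signed:
        return f"{host} {{\n    reverse_proxy {backend}\n}}\n"
    # HTTPS to the backend without verifying its self-signed certificate,
    # the same transport block used for the Proxmox VE entry.
    return (
        f"{host} {{\n"
        f"    reverse_proxy {backend} {{\n"
        "        transport http {\n"
        "            tls\n"
        "            tls_insecure_skip_verify\n"
        "        }\n"
        "    }\n"
        "}\n"
    )


def caddyfile(services):
    """Join the site blocks for all services, separated by blank lines."""
    return "\n".join(
        site_block(host, backend, self_signed)
        for host, (backend, self_signed) in sorted(services.items())
    )


if __name__ == "__main__":
    print(caddyfile(SERVICES), end="")
```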

Proxmox Backup Server

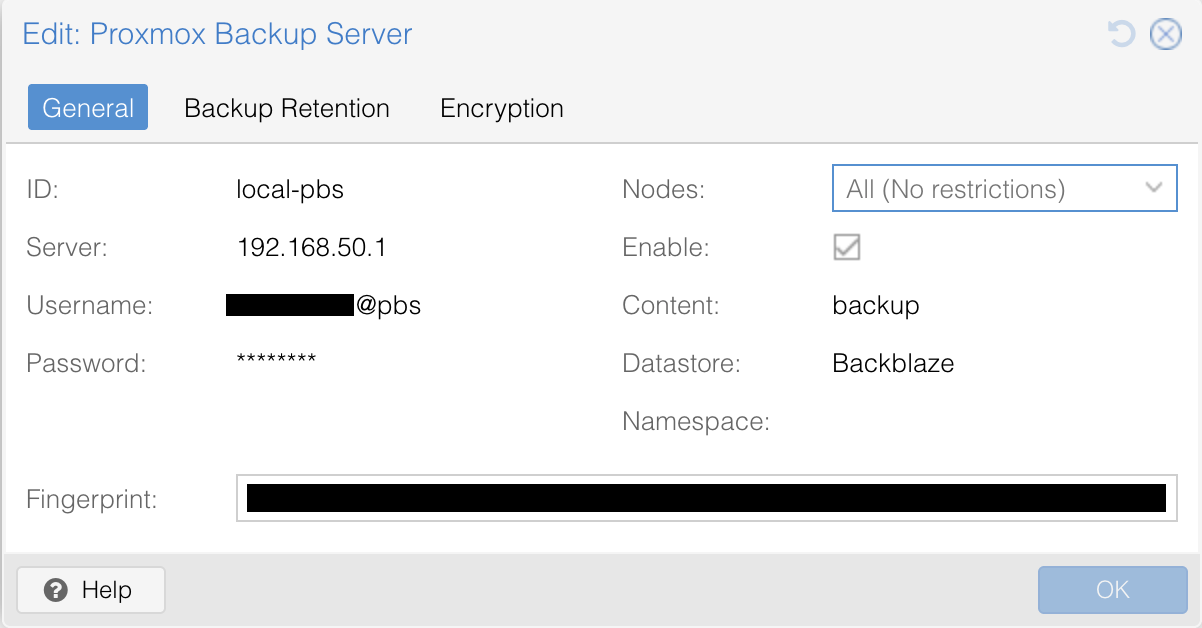

This was the simplest part. I know it is not the recommended approach, but my Proxmox Backup Server is installed on the Proxmox VE host itself. I installed it and configured it to use Backblaze's S3-compatible storage. Then I added it as storage in Proxmox VE using the host's local IP.

Conclusion

I have no idea why everyone feels the need to write a conclusion for every post nowadays. In most cases, it looks like a forced conclusion to a school physics problem: "Solving this problem of moving trains, we found out that trains can move".

Happy tinkering.