Daily Matrix and Mastodon backups with zero downtime

Now that I run my own Mastodon instance where anyone can request registration, there are standards to meet, for example, the Mastodon Server Covenant. One of its requirements is "Daily backups", and that is what today's story is about. It involves Proxmox Backup Server, Ansible, and Tailscale as the three pillars of "Daily Matrix and Mastodon backups with zero downtime."

The simplest way to back up my Mastodon and Matrix servers, which run in separate LXCs, would be to set up snapshot-type backups at the Proxmox datacenter level and be happy, but snapshotting the database files is not the right way. You can catch Postgres in the middle of a transaction and end up with a corrupted database after the restore. The right way is to dump the databases, for example, with pg_dump, because "It generates a consistent snapshot of the database at the time the command is executed, even if the database is being used concurrently, without blocking other users."

But what about the other files? Configuration files, secrets, media storage? Sure, we can rsync them off the server along with the database dump and be happy, but what about backup versioning, retention, and deduplication? As I already had a Proxmox Backup Server to handle those, my plan for backing up a server was simple:

- Make a database dump to the file system of the server

- Make a snapshot-type backup of the server to the PBS

This way, I'd have consistent daily backups with a database dump and all needed files. And the restore procedure would consist of the same two steps, but in reverse:

- Restore the server from PBS

- Restore the database from the dump on the server's file system
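The second restore step can be scripted with Ansible as well. Below is a minimal sketch, not part of my actual setup, assuming the container has already been restored from PBS, the dump sits at the path used by the backup playbook later in this post, and the Mastodon and Matrix services are stopped first (the dump was made with -c, and DROP DATABASE fails while clients are connected). The play name is illustrative.

```yaml
# Hypothetical restore play, not part of the backup playbook.
# Run it only after the container is restored from PBS and the services
# using the databases (Mastodon, Matrix) have been stopped.
- name: Restore databases from the dump
  hosts: postgres
  remote_user: root
  tasks:
    - name: Replay the pg_dumpall output
      # psql starts in the "postgres" maintenance database; the dump's own
      # \connect commands switch databases as they are recreated.
      ansible.builtin.command: psql -f /var/lib/postgresql/db_dump_all.sql postgres
      become_user: postgres
      become: true
```

By default psql keeps going past individual SQL errors and still exits successfully, so the task output is worth reading after a restore; some errors, such as a role that already exists, are expected with pg_dumpall dumps.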

I'm not claiming to have invented something new; I just think it is a good approach. Losing or corrupting a few media files during this process is far less critical than missing database records or ending up with a corrupted database.

So I ended up with two separate tools involved in a backup: the pg_dump tooling on the server and the Proxmox node that performs the LXC backup. Coordinating the two only through fixed times and delays felt like a bad idea, so I decided to use Ansible on another Proxmox LXC to connect to my servers, make a database dump, and only then trigger a Proxmox backup job.

Tailscale SSH

To make that work, I needed SSH connections from my "guard" server running Ansible to the two servers being backed up. Sure, I could generate new SSH keys and distribute them, but I already had Tailscale on my Proxmox guests, so why not use Tailscale SSH for that?

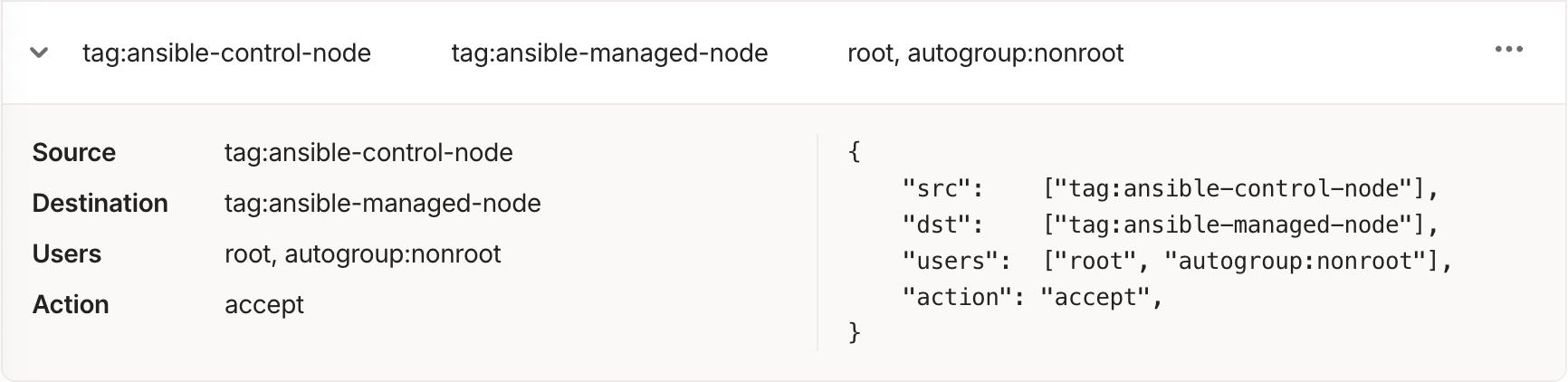

The hardest part of setting up Tailscale SSH is configuring the access rules, because Tailscale SSH between Tailscale devices is disabled by default. To enable it, I created two ACL tags in Tailscale:

- "ansible-control-node" to assign to the "guard" server

- "ansible-managed-node" to assign to the Matrix and Mastodon servers

Then, in the Tailscale access controls, I created a new Tailscale SSH rule allowing SSH connections from "ansible-control-node" to "ansible-managed-node".
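Once the rule is in place, a tiny play can confirm that Ansible actually gets through. This is a hedged sketch, assuming an inventory with a postgres group like the one used in the playbook below, a rule that permits the root user (the playbook connects as root), and a rule action of accept rather than check, since check mode asks for interactive re-authentication that an unattended job cannot provide.

```yaml
# Hypothetical smoke test for the Tailscale SSH rule: it succeeds only if the
# control node can open an SSH session to every host in the "postgres" group.
- name: Verify Tailscale SSH access
  hosts: postgres
  remote_user: root
  gather_facts: false # skip fact gathering; only connectivity matters here
  tasks:
    - name: Ping the managed nodes
      ansible.builtin.ping:
```

A "pong" from each host means SSH over the tailnet works and Python is available on the target, which every later task relies on.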

Playbook

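On the "ansible-control-node", I installed Ansible Core 2.19 (the current version at the time of writing) and created a playbook.yaml and an inventory.yaml. Because ansible-core does not bundle community collections, the community.proxmox collection used below has to be installed separately, for example with ansible-galaxy collection install community.proxmox.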
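For reference, an inventory.yaml for this setup could be as small as the sketch below. The host names matrix and mastodon are hypothetical placeholders, assumed to resolve through Tailscale MagicDNS; only the postgres group name comes from the playbook.

```yaml
# Hypothetical inventory.yaml: "matrix" and "mastodon" are placeholder
# host names, assumed to be the MagicDNS names of the two LXCs.
postgres:
  hosts:
    matrix:
    mastodon:
```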

After some research and reading the documentation, my playbook.yaml ended up with two plays.

Database dump

```yaml
- name: Database dumps
  hosts: postgres
  remote_user: root
  tasks:
    - name: Dump all databases
      ansible.builtin.command: pg_dumpall -c -f /var/lib/postgresql/db_dump_all.sql
      become_user: postgres
      become: true
```

Here, hosts is an inventory group containing the servers with PostgreSQL installed.

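I used the ansible.builtin.command module to dump all databases on the server to a file while becoming the postgres user. This system user is created by default on Ubuntu or Debian when Postgres is installed from the apt repository. The -c flag makes pg_dumpall emit DROP commands for the dumped databases, roles, and tablespaces before recreating them, so the dump can be replayed over an existing cluster.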
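One weak spot is that a failed dump on one server does not, by itself, stop the Proxmox backup play, which runs on localhost. A hedged variant of the play adds any_errors_fatal, which makes Ansible skip all remaining tasks and plays after a failure, plus a cheap check that the dump file exists and is not empty. The register name db_dump is illustrative.

```yaml
- name: Database dumps
  hosts: postgres
  remote_user: root
  any_errors_fatal: true # any failure here aborts the run, including the backup play
  tasks:
    - name: Dump all databases
      ansible.builtin.command: pg_dumpall -c -f /var/lib/postgresql/db_dump_all.sql
      become_user: postgres
      become: true

    - name: Inspect the dump file
      ansible.builtin.stat:
        path: /var/lib/postgresql/db_dump_all.sql
      register: db_dump # hypothetical variable name

    - name: Make sure the dump exists and is not empty
      ansible.builtin.assert:
        that:
          - db_dump.stat.exists
          - db_dump.stat.size > 0
        fail_msg: Database dump is missing or empty
```

The size check only catches an empty file; it does not validate the SQL inside the dump.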

Proxmox backup

```yaml
- name: Backup Proxmox nodes
  hosts: localhost
  tasks:
    - name: Backup matrix and mastodon node
      community.proxmox.proxmox_backup:
        api_user: ansible@pve
        api_password: "***************"
        api_host: "***.***.***.***"
        storage: pbs # PBS storage ID
        backup_mode: snapshot
        retention: keep-monthly=6, keep-weekly=4, keep-daily=7
        mode: include
        wait: true
        wait_timeout: 240 # Wait for x seconds before throwing a timeout error
        vmids: # Proxmox guest IDs to back up
          - 109
          - 111
```

Here, hosts is localhost because the Proxmox backup job is triggered through the Proxmox API, so the task only needs to reach the Proxmox host over the network, not the guests themselves.

I created a separate user for this task on my Proxmox VE and granted it a role with the VM.Backup privilege on the selected guests, plus the Datastore.Allocate and Datastore.AllocateSpace privileges on the PBS storage.
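Keeping that user's password in plain text in playbook.yaml is a risk. A hedged alternative is Ansible Vault; in this sketch, the file /root/ansible/vault.yaml and the variable vault_proxmox_password are hypothetical names, and everything else mirrors the play above.

```yaml
# Variant of the "Backup Proxmox nodes" play that reads the API password
# from an Ansible Vault file instead of storing it in plain text.
- name: Backup Proxmox nodes
  hosts: localhost
  vars_files:
    - /root/ansible/vault.yaml # hypothetical, encrypted; defines vault_proxmox_password
  tasks:
    - name: Backup matrix and mastodon node
      community.proxmox.proxmox_backup:
        api_user: ansible@pve
        api_password: "{{ vault_proxmox_password }}"
        api_host: "***.***.***.***"
        storage: pbs # PBS storage ID
        backup_mode: snapshot
        retention: keep-monthly=6, keep-weekly=4, keep-daily=7
        mode: include
        wait: true
        wait_timeout: 240 # seconds to wait before failing with a timeout
        vmids: # Proxmox guest IDs to back up
          - 109
          - 111
```

The encrypted file can be created with ansible-vault create /root/ansible/vault.yaml. Because the playbook runs unattended, the ansible-playbook command would then also need --vault-password-file pointing at a file readable only by root.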

Schedule

The last step was to run this playbook daily, for which I used a cron job. The directory where Python tools such as ansible-playbook are installed is added to PATH in .bashrc, which cron does not read, so I used the full path to ansible-playbook. Use whereis ansible-playbook to find it.

```
0 1 * * * /root/.local/bin/ansible-playbook /root/ansible/playbook.yaml -i /root/ansible/inventory.yaml > /var/log/backups.log 2>&1
```

This job runs every day at 01:00 and writes its output to /var/log/backups.log, overwriting the file on every run. To append to the log instead, change > /var/log/backups.log to >> /var/log/backups.log.
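Since Ansible is already on the control node, the crontab entry itself can be managed by Ansible too. A minimal sketch, assuming the same paths as above; the entry name is an arbitrary label that ansible.builtin.cron writes as a comment so it can find and update the line later.

```yaml
# Hypothetical play that installs the same crontab entry on the control node.
- name: Schedule daily backups
  hosts: localhost
  tasks:
    - name: Run the backup playbook every day at 01:00
      ansible.builtin.cron:
        name: daily matrix and mastodon backups # arbitrary identifying label
        minute: "0"
        hour: "1"
        user: root
        job: /root/.local/bin/ansible-playbook /root/ansible/playbook.yaml -i /root/ansible/inventory.yaml > /var/log/backups.log 2>&1
```

Running this play twice leaves a single entry, because the module matches on the name rather than appending a duplicate line.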